Dripping Faucets and Memory Leaks

All Java developers will need to deal with memory leaks at some point in their careers. There are already numerous blog posts on the internet about developers' experiences. I figured I wold just add another, which discusses the trial and tribulations of my latest ordeal with a leaky faucet.

We encountered a situation where a long running application server infrastructure all of sudden started to experience sever unreliability issues. The application servers were starting to crash numerous times a day. The application server was Oracle's old OC4J J2EE container, however that is really irrelevant in this case. The application change would have caused issues on any application server platforms.

Initial Pipe Tightening

This particular matter was made a little more convoluted by the fact that there are actually multiple applications running in the same container. One of the applications was a historical memory hungry application which caused our infrastructure team to go down an errant path. They were watching process memory size and had concluded that we had reached a volume where our heap would eventually be exhausted and cause the container to crash. They started brainstorming on how they could lower the memory requirements on the server. They introduced two changes in attempt to help alleviate the situation: 1) they increased the max heap size another 256MB 2) they decided to try reducing the session timeout from 20 minutes to 5 minutes. You can easily deduce the rationale behind the 1st change. The thought behind the second change was a result of the fact that the environment we were dealing with was a service platform and there was no state being maintained in the application session.Well these changes just mad matters worse. The first change ended up just increasing the CPU processes as the Garbage Collector (GC) started working a lot harder, and the session clean up processes just added more load. It obviously missed the target and the pipes were still leaking.

Call the Plumber

The tools available in the base JDK and the free tooling that is available on the web have really improved over the years. It has certainly become easier to start diagnosing issues, but it still is always difficult to find and batch the exact leak point.Step 1: Water Test

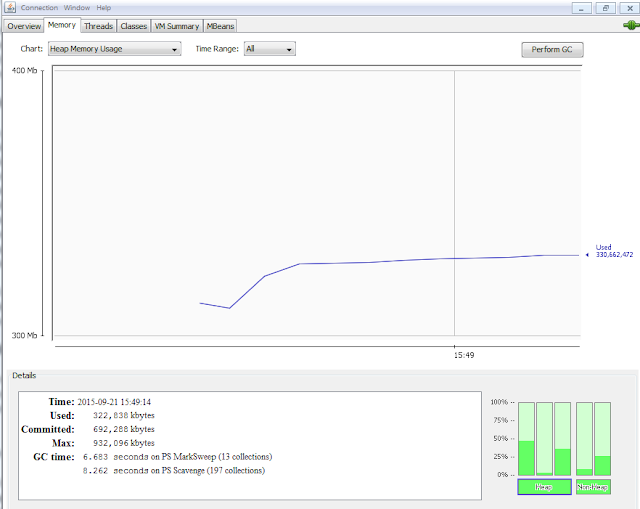

The first step taken was to get jconsole (available in base SDK since Java 5) up and runningOnce connected with jconsole you can start to review all sorts of trending information in regards to memory utilization, class loading, threads and CPU utilization.

After reviewing the graphing for awhile, I started to focus in on the memory tab. It became apparent pretty quickly that yes the heap was growing close to the max but would recover nicely once the GC ran. However I noticed that the non-heap "permgen" memory would just grow and grow and grow. Once it would near or hit the max permgen, configured as 256MB on our application servers, the server would crash.

Step 2: Flush the Dirty Water

At this point I started to suspect that there was some issue going on with the class loading and unloading. In other words I was suspicious of a classloader leak. I first tried to use jmap (another SDK tool) to dump the heap and permgen stats, but every time I tried to connect to the JVM process it would result in the VM crashing. Therefore I fell back to jconsole and used the jmx option to dump the heap. Look at the image below to determine the location of the option. The first argument specifies the name of the file to be created and the second argument indicates whether you want a garbage collection to be run before the heap dump is created.Step 3: Send off to the Lab

I then used the the Eclipse Memory Analyzer (MAT) to start looking at the heap dump. Upon launching the MAT you get prompted whether you would like to run the Memory Leak report. You can see a snippet of the results which were produced by running that report. See output below:The top suspect highlighted in the report re-enforced the possibility that we were actually experiencing a classloader leak. Note: the MAT tool is very powerful. It is worth spending some time with available tutorials to figure out everything you can do within the tool.

Step 4: Pressure Test

Next was to spend some time watching what was actually going on with the class loading to see if I could pinpoint the problematic spot in the code. In order to do that I simply turned on class loading tracing on the JVM. In this case, this would be the JVM used to run the application server. The information will be written to the stdout. Below are the two JVM options that were added to the start script:-XX:+TraceClassLoading

-XX:+TraceClassUnloading

After the server was restarted and we watched activity in the production environment a clear pattern was able to be detected. There were classes being created from JAXB and the same derived classes were being loaded over and over again. Also it appeared that these classes were never being unloaded. The entries in the log would look like something below with actual package, class, method names inserted into the placeholders below.

[Loaded <package_name>.<class_name>$JaxbAccessorM ...<method_name>]

Step 5: Evaluation

With a bit of reading and searching the web there were numerous mentions about issues with JAXB either from bugs that caused memory leaks or misuse of JABContext.newInstance(). The later essentially indicating that use of newInstance() results in creating a new JAXBContext on the existing classloader and can result in numerous copies of classes being loaded.Step 6: Resolution

Evaluating the code at hand we were able to pinpoint a case where a new JAXB Context was being created with each call to a specific method. This aligned perfectly with the duplicate class loading messages we were seeing in the stdout with the class load tracing on. The code was modified to use the same JAXB Context. You could accomplish this with a static initializer, singleton pattern or injection of a JAXB Context.Once modified the crashes subsided!

Comments

Post a Comment